Keli Yerian, Distinguished Teaching Professor in Linguistics, launched an AI Health Check for faculty teaching mid- to large-sized Core Education (CoreEd) courses in the Humanities and Social Sciences. This service evaluates how vulnerable course assignments may be to misuse by generative AI tools.

This collaborative effort involved undergraduate team members—David Mitrovcan Morgan (Data Science & Economics), Ethan Robb (Data Science), and Angela Winn (Computer Science)—who worked with Dr. Yerian to identify the susceptibility of assignments to AI use. In Spring 2025, the team generated 24 reports for 17 faculty members.

Evaluation Model

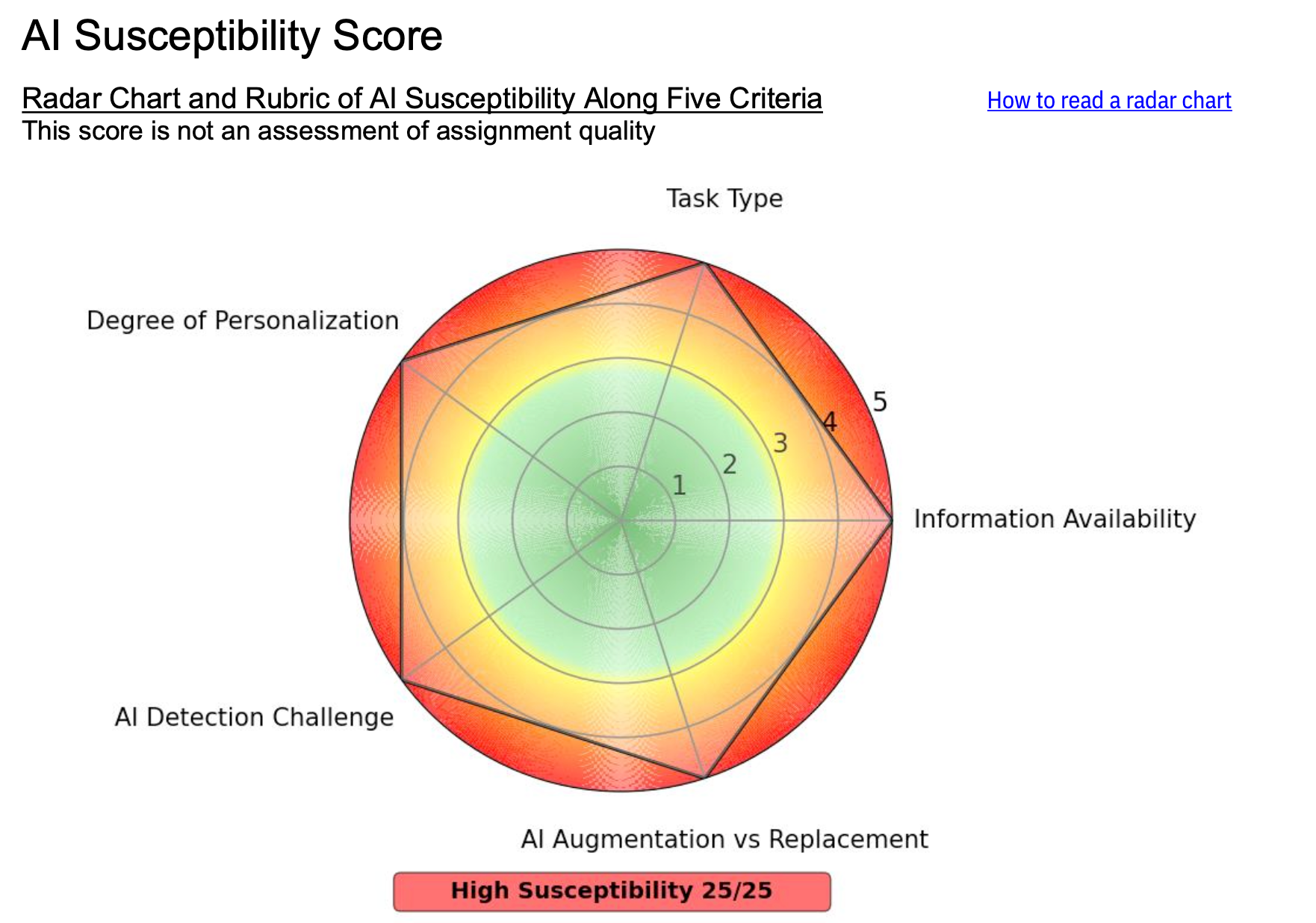

The team developed a model based on five criteria, giving each assignment a score out of 25 to indicate its vulnerability to AI. Lower scores indicate greater resistance to misuse; higher scores indicate greater susceptibility.

- Task Type: How easily AI can complete the type of task.

- Information Availability: How easily AI can access needed content.

- AI Augmentation vs. Replacement: Whether AI enhances or replaces student work.

- AI Detection Challenge: How hard it is to distinguish AI-generated work.

- Degree of Personalization: How much personal input, experience, or choice is required.
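
As a rough illustration of how five criteria could add up to a score out of 25, here is a minimal sketch. The source gives only the five criteria and the 25-point total, so the 1-to-5 scale per criterion, the equal weighting, the function name `susceptibility_score`, and the example ratings are all assumptions for illustration, not the team's actual rubric. Each rating is read as vulnerability, so an assignment requiring little personalization would rate high on Degree of Personalization.

```python
# Hypothetical sketch: the per-criterion scale and equal weighting are assumptions.
CRITERIA = (
    "Task Type",
    "Information Availability",
    "AI Augmentation vs. Replacement",
    "AI Detection Challenge",
    "Degree of Personalization",
)

MAX_PER_CRITERION = 5  # assumed: 5 criteria x 5 points = 25 total


def susceptibility_score(ratings: dict[str, int]) -> int:
    """Sum the five criterion ratings into a single score out of 25.

    Higher totals mean the assignment is more susceptible to AI misuse.
    """
    missing = [name for name in CRITERIA if name not in ratings]
    if missing:
        raise ValueError(f"Missing ratings for: {', '.join(missing)}")

    for name in CRITERIA:
        value = ratings[name]
        if not 1 <= value <= MAX_PER_CRITERION:
            raise ValueError(
                f"{name} must be between 1 and {MAX_PER_CRITERION}, got {value}"
            )

    return sum(ratings[name] for name in CRITERIA)


# Made-up ratings for an imaginary take-home essay (not real report data).
example = {
    "Task Type": 4,
    "Information Availability": 5,
    "AI Augmentation vs. Replacement": 3,
    "AI Detection Challenge": 4,
    "Degree of Personalization": 2,
}

print(susceptibility_score(example))  # prints 18
```

In this sketch, a total of 18 out of 25 would place the imaginary assignment toward the more vulnerable end of the range.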

Report Components

Each report included:

- A vulnerability rating across the five criteria (with a radar chart and susceptibility score).

- Sample AI-generated responses to the assignment.

- Recommendations for reducing vulnerability and, where appropriate, adapting the assignment to promote ethical, reflective AI use.

- An invitation for follow-up consultations with the AI Health Check team or the Teaching Engagement Program (TEP).

Key Takeaways

- High-stakes assignments with high vulnerability may unintentionally encourage misuse of AI, potentially creating peer pressure for others to do the same.

- Educators must reflect on what to assess and how to assess learning in this evolving AI landscape.

You might ask:

- What knowledge should students retain without relying on tools?

- Which skills should they master both with and without AI?

- Which modalities (e.g., written, visual, oral) are critical for authentic learning in the assignment?

The AI Health Check did not assess the overall quality of assignments. Instead, it focused on creating “productive friction” that keeps students from offloading essential thinking to AI tools, while offering practical strategies to reduce misuse and support authentic learning.

Insights on AI & Learning

- Students, for the most part, want to learn.

- They appreciate thoughtful efforts to keep learning meaningful in this new environment.

- Human learning communities remain as important as ever.

(Re)thinking Assessments with AI in Mind

This self-check resource invites instructors to reflect on how they design, evaluate, and communicate their assessments in the age of GenAI. It’s organized into four key areas:

Assess Your Assignments

Run assignments through AI tools like ChatGPT, Copilot, or Gemini to see what they can produce. Use iterative prompts to test how AI interprets assignment instructions and rubrics.

Reassess Your Learning Goals

Reflect on what students need to know or do independently of AI vs. in collaboration with AI. Consider how GenAI is used in your field and what may no longer need to be taught or assessed in the same way.

Make Informed, Intentional Choices

Decide which assessments should allow or discourage AI. Redesign vulnerable assignments with clarity and intentionality.

Communicate Transparently

Clearly state whether and how students can use AI. Share your reasoning and ethical considerations to support trust and engagement.

Sample Strategies Included

If You Discourage AI Use: Emphasize in-class work, require students to complete process-oriented steps, or use oral exams to check understanding. You might also include flipped learning or reflective writing, or use more low-stakes assessments to build student confidence and skills.

If You Encourage AI Use: Guide students in how to use AI ethically and effectively. Ask them to show their process, cite tools they used, and reflect on the limits of AI’s output. Consider redesigning tasks to go beyond what AI can do, especially in terms of audience, creativity, or lived experiences.

For All Assignments: Focus on authentic engagement, human connection, and creativity. Design assignments that emphasize process over product, allow for personalization, and invite students to work with real-world audiences or meaningful contexts.